network

URL - class for working with URL strings

URLConnection - class for handling connections

- google geolocation xml(json)

- jstl (proxy)

=> the urllib package

Socket

https://docs.python.org/ko/3/library/urllib.html

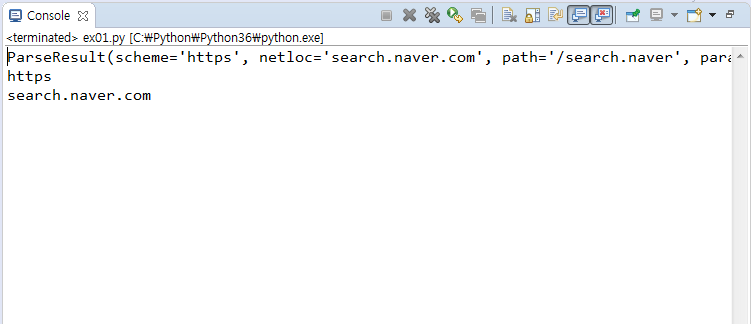

URLEx01.ex01 - urlparse

from urllib.parse import urlparse

url = urlparse('https://search.naver.com/search.naver?where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=starwars')

print(url)

from urllib.parse import urlparse

url = urlparse('https://search.naver.com/search.naver?where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=starwars')

print(url)

print(url.scheme)

from urllib.parse import urlparse

url = urlparse('https://search.naver.com/search.naver?where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=starwars')

print(url)

print(url.scheme)

print(url.hostname)

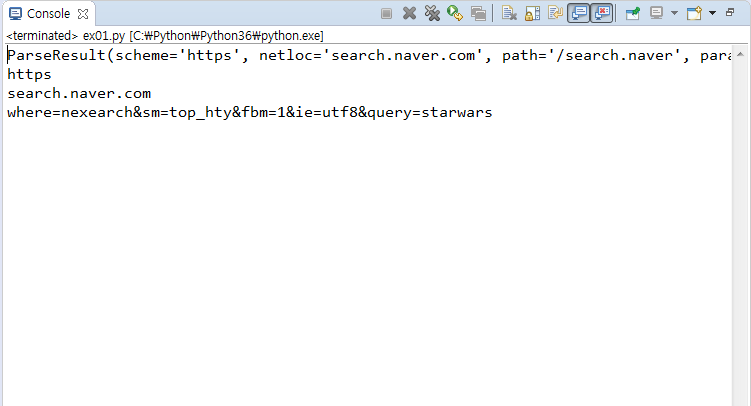

from urllib.parse import urlparse

url = urlparse('https://search.naver.com/search.naver?where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=starwars')

print(url)

print(url.scheme)

print(url.hostname)

print(url.query)

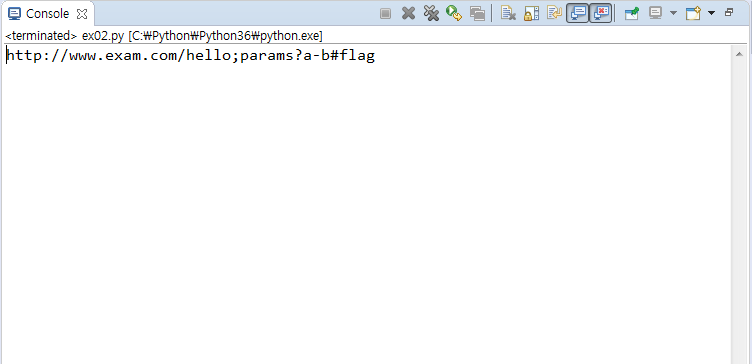
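The value of `url.query` is still one flat string. As a minimal sketch, assuming the same Naver search URL used above, the remaining fields of the parse result can be read by name and the query string can be split into individual parameters with `parse_qs`:

```python
from urllib.parse import urlparse, parse_qs

url = urlparse('https://search.naver.com/search.naver?where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=starwars')

# ParseResult is a named tuple: scheme, netloc, path, params, query, fragment
print(url.netloc)   # search.naver.com
print(url.path)     # /search.naver

# parse_qs turns the query string into a dict whose values are lists
params = parse_qs(url.query)
print(params['query'])   # ['starwars']
```

Each value is a list because the same key may legally appear more than once in a query string.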

URLEx01.ex02 - urlunparse

from urllib.parse import urlunparse

url = urlunparse(('http', 'www.exam.com', '/hello', '', '', ''))

print(url)

from urllib.parse import urlunparse

url = urlunparse(('http', 'www.exam.com', '/hello', 'params', 'a-b', 'flag'))

print(url)

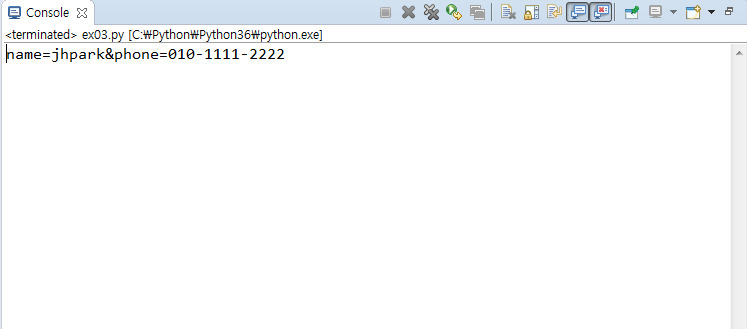

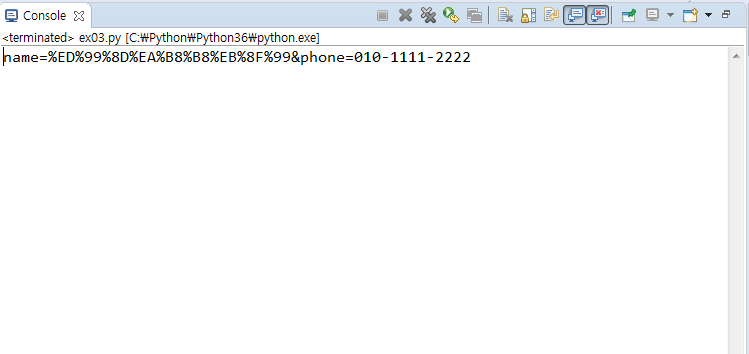
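`urlunparse` expects the six items in the order scheme, netloc, path, params, query, fragment. A small sketch of a round trip, assuming the same example host as above: parse a URL, swap out only the query, and rebuild it. The `page` parameter is made up for illustration.

```python
from urllib.parse import urlparse, urlunparse, urlencode

parts = urlparse('http://www.exam.com/hello;params?a-b#flag')
print(parts.params, parts.query, parts.fragment)  # params a-b flag

# ParseResult is a named tuple, so _replace returns a modified copy
changed = parts._replace(query=urlencode({'page': 2}))
rebuilt = urlunparse(changed)
print(rebuilt)  # http://www.exam.com/hello;params?page=2#flag
```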

URLEx01.ex03 - urlencode

from urllib.parse import urlencode

form = { 'name': 'jhpark', 'phone': '010-1111-2222'}

encform = urlencode(form)

print(encform)

from urllib.parse import urlencode

form = { 'name': '홍길동', 'phone': '010-1111-2222'}

encform = urlencode(form)

print(encform)

from urllib.parse import urlencode, parse_qs

form = { 'name': '홍길동', 'phone': '010-1111-2222'}

encform = urlencode(form)

print(encform)

#name=%ED%99%8D%EA%B8%B8%EB%8F%99&phone=010-1111-2222

qsform = parse_qs('name=%ED%99%8D%EA%B8%B8%EB%8F%99&phone=010-1111-2222')

print(qsform)

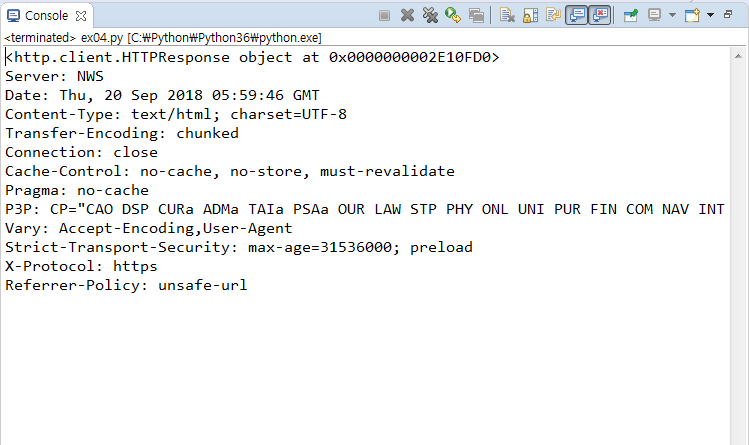
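Two details are easy to miss here: `parse_qs` returns every value as a list, and `urlencode` needs `doseq=True` to write such lists back out. The sketch below illustrates both, along with `quote` and `unquote` for encoding a single value. The second phone number is an invented example value.

```python
from urllib.parse import urlencode, parse_qs, quote, unquote

# quote/unquote handle a single value; non-ASCII text is UTF-8 percent-encoded
encoded = quote('홍길동')
print(encoded)            # %ED%99%8D%EA%B8%B8%EB%8F%99
print(unquote(encoded))   # 홍길동

# a key may appear more than once, which is why parse_qs returns lists
qs = parse_qs('name=%ED%99%8D%EA%B8%B8%EB%8F%99&phone=010-1111-2222&phone=010-3333-4444')
print(qs['phone'])        # ['010-1111-2222', '010-3333-4444']

# doseq=True writes each list item back out as its own key=value pair
roundtrip = urlencode(qs, doseq=True)
print(roundtrip)
```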

URLEx01.ex04 - urlopen

from urllib.request import urlopen

urldata = urlopen('https://m.naver.com')

print(urldata)

from urllib.request import urlopen

urldata = urlopen('https://m.naver.com')

print(urldata)

print(urldata.headers)

from urllib.request import urlopen

urldata = urlopen('https://m.naver.com')

print(urldata)

print(urldata.headers)

html = urldata.read()

print(html)

from urllib.request import urlopen

urldata = urlopen('https://m.naver.com')

print(urldata)

print(urldata.headers)

html = urldata.read()

print(html.decode('utf-8'))

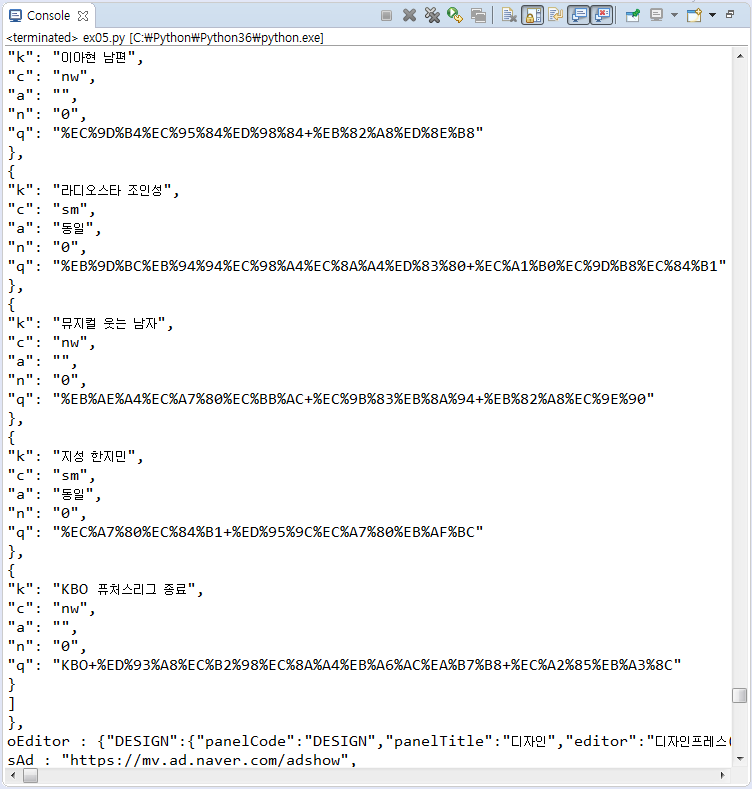
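The examples above leave the response open and hardcode `'utf-8'`. As a hedged sketch, the hypothetical helper `read_text` below closes the response with a `with` block and uses the charset the server declares in its Content-Type header, falling back to UTF-8 only when none is declared. The fallback is an assumption, not something the server guarantees.

```python
from urllib.request import urlopen

def read_text(url, default_charset='utf-8'):
    """Fetch url and decode the body using the charset the server declares."""
    # the with-block closes the response even if read() raises
    with urlopen(url) as response:
        # headers behaves like an email.message.Message, so
        # get_content_charset() is available; it returns None if unset
        charset = response.headers.get_content_charset() or default_charset
        return response.read().decode(charset)

# usage (needs network access):
# print(read_text('https://m.naver.com')[:200])
```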

URLEx01.ex05 - Request, urlopen: build a Request object for the URL first, then open it

from urllib.request import Request, urlopen

url = 'https://m.naver.com'

req = Request(url)

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))

from urllib.request import Request, urlopen

from urllib.parse import urlencode

# https://search.naver.com/search.naver

# ?

# where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=%EC%8A%A4%ED%83%80%EC%9B%8C%EC%A6%88

url = 'https://search.naver.com/search.naver?'

querystring = {'where': 'nexearch', 'sm': 'top_hty', 'fbm': '1', 'ie': 'utf8', 'query': '스타워즈'}

req = Request(url + urlencode(querystring))

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))

The code above fails with HTTPError: HTTP Error 403. The request did not come from a regular browser, so the server (or a firewall in front of it) refused it.
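One way to see why the request does not look like a browser: by default urllib sends a User-Agent of the form `Python-urllib/<version>`. The sketch below prints that default without touching the network and adds a hypothetical helper, `try_open`, that reports an error status instead of stopping the script.

```python
from urllib.request import build_opener, urlopen
from urllib.error import HTTPError, URLError

# the opener urllib builds by default carries its own User-agent header
default_headers = dict(build_opener().addheaders)
print(default_headers['User-agent'])   # e.g. Python-urllib/3.x

def try_open(url):
    """Return (status, reason) instead of letting the exception stop the script."""
    try:
        with urlopen(url) as response:
            return response.status, response.reason
    except HTTPError as err:
        # the server answered, but with an error status such as 403
        return err.code, err.reason
    except URLError as err:
        # no HTTP answer at all (DNS failure, refused connection, ...)
        return None, str(err.reason)
```

`HTTPError` is caught first because it is a subclass of `URLError`.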

from urllib.request import Request, urlopen

from urllib.parse import urlencode

# https://search.naver.com/search.naver

# ?

# where=nexearch&sm=top_hty&fbm=1&ie=utf8&query=%EC%8A%A4%ED%83%80%EC%9B%8C%EC%A6%88

url = 'https://search.naver.com/search.naver?'

querystring = {'where': 'nexearch', 'sm': 'top_hty', 'fbm': '1', 'ie': 'utf8', 'query': '스타워즈'}

req = Request(url + urlencode(querystring), headers= {'User-Agent': 'Mozilla/5.0'})

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))

Adding a User-Agent header that claims to be Mozilla/5.0 makes the request look like it comes from a browser, and with the code above the data comes back normally.
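The header can be checked before anything is sent. This is a minimal sketch with a hypothetical helper, `build_request`, that builds the query string and the User-Agent header in one place. Note that `Request` stores header names capitalized, so the lookup key is `'User-agent'`.

```python
from urllib.request import Request
from urllib.parse import urlencode

def build_request(base_url, params, user_agent='Mozilla/5.0'):
    """Build a GET Request with an encoded query string and a User-Agent header."""
    return Request(base_url + '?' + urlencode(params),
                   headers={'User-Agent': user_agent})

req = build_request('https://search.naver.com/search.naver',
                    {'where': 'nexearch', 'query': '스타워즈'})

print(req.full_url)
# Request stores header names capitalized, so look it up as 'User-agent'
print(req.get_header('User-agent'))   # Mozilla/5.0
print(req.get_method())               # GET
```

Passing the result to `urlopen(req)` sends the request exactly as in the example above.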

Let's build the same thing for Daum.

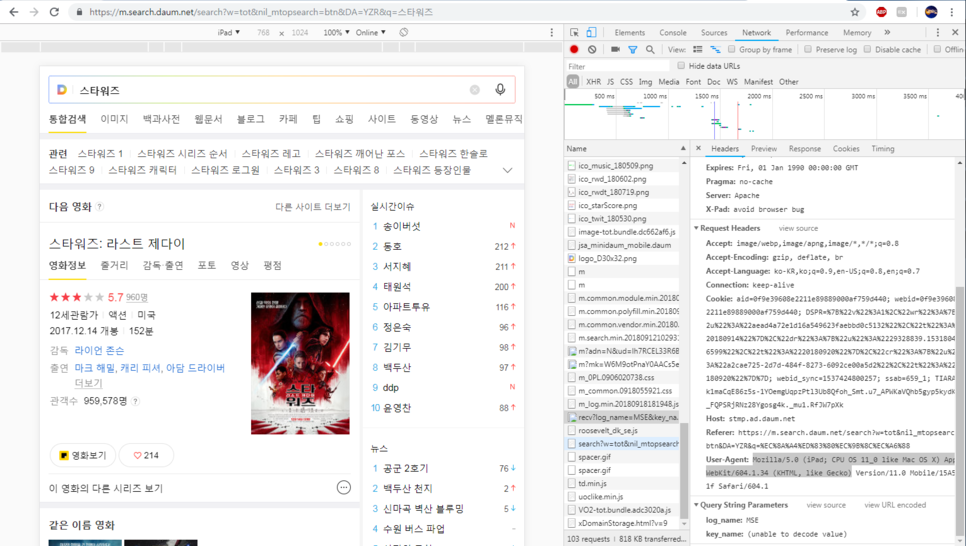

Ex01.ex06 - The User-Agent has to be written out properly. Use the browser's developer tools to find it.

from urllib.request import Request, urlopen

from urllib.parse import urlencode

# https://search.daum.net/search

# ?

# w=tot&DA=YZR&t__nil_searchbox=btn&sug=&sugo=&q=%EC%8A%A4%ED%83%80%EC%9B%8C%EC%A6%88

url = 'https://search.daum.net/search?'

querystring = {'w': 'tot', 'DA': 'YZR', 't__nil_searchbox': 'btn', 'sug': '', 'sugo': '', 'q': '스타워즈'}

req = Request(url + urlencode(querystring), headers= {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko'})

# key and value

# User-Agent Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))

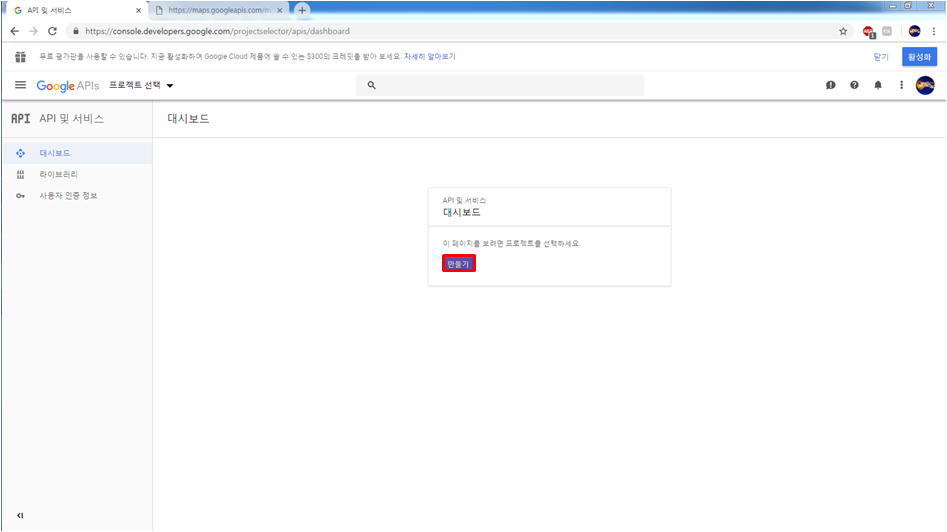

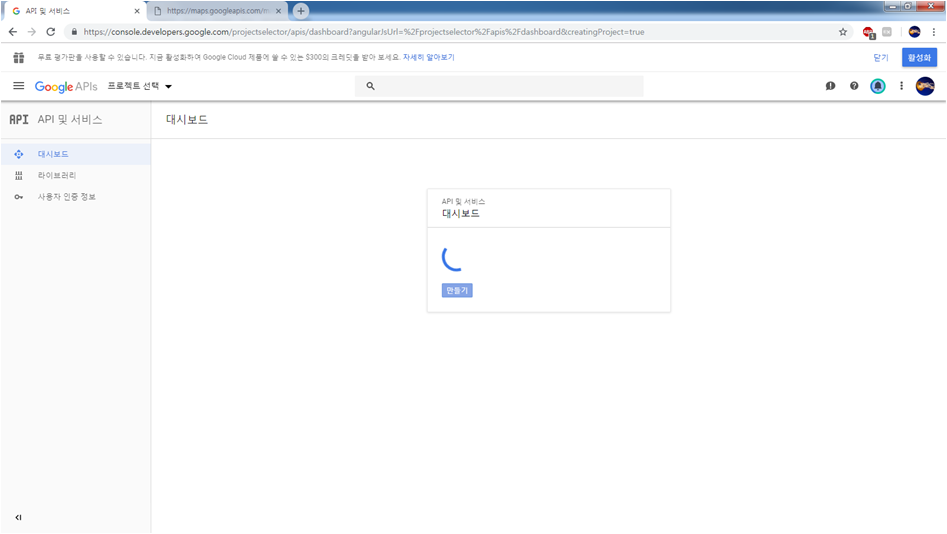

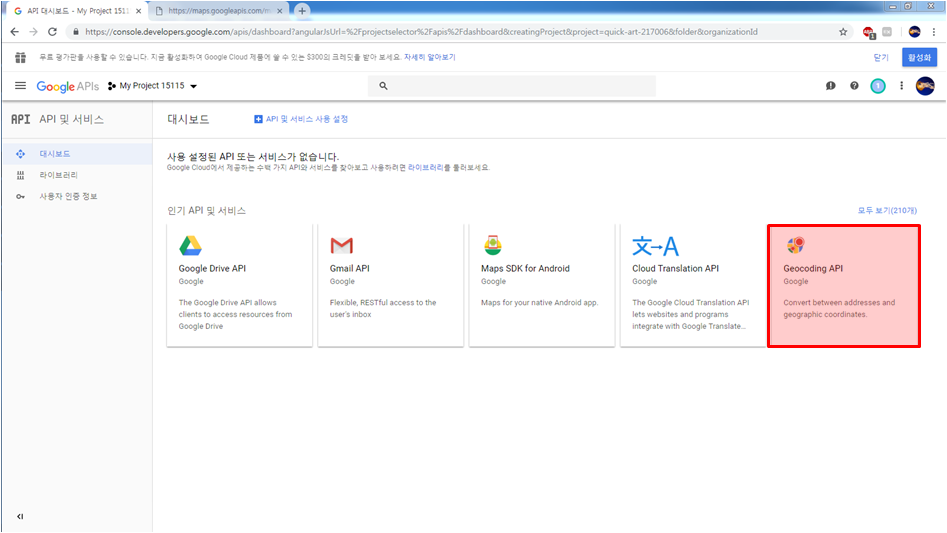

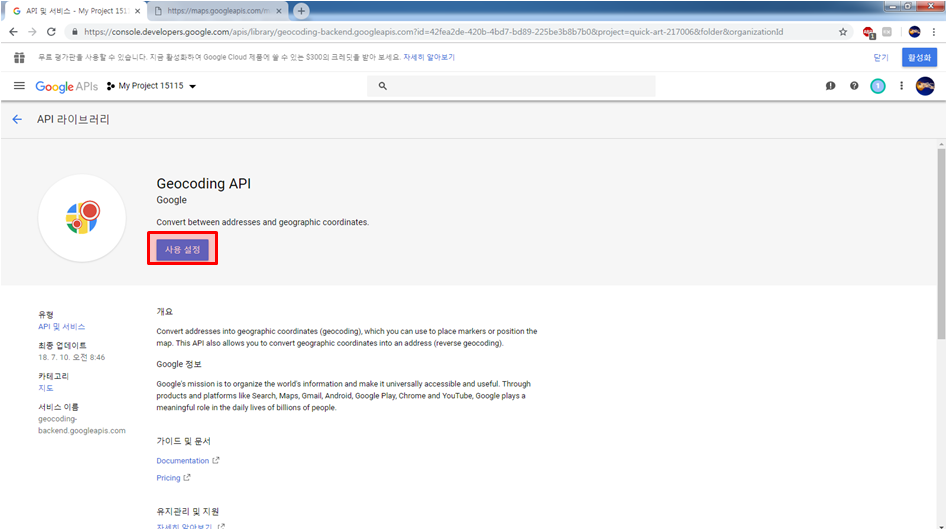

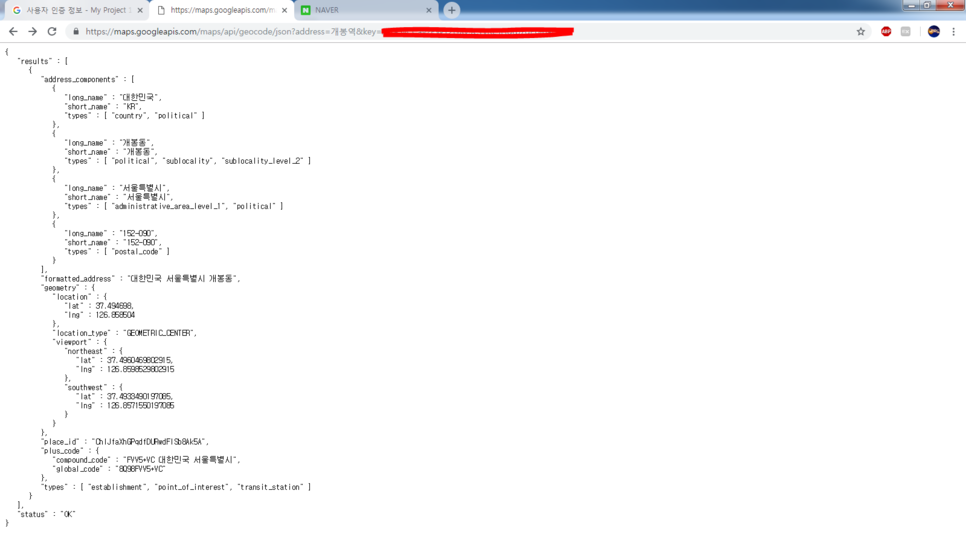

geocoding

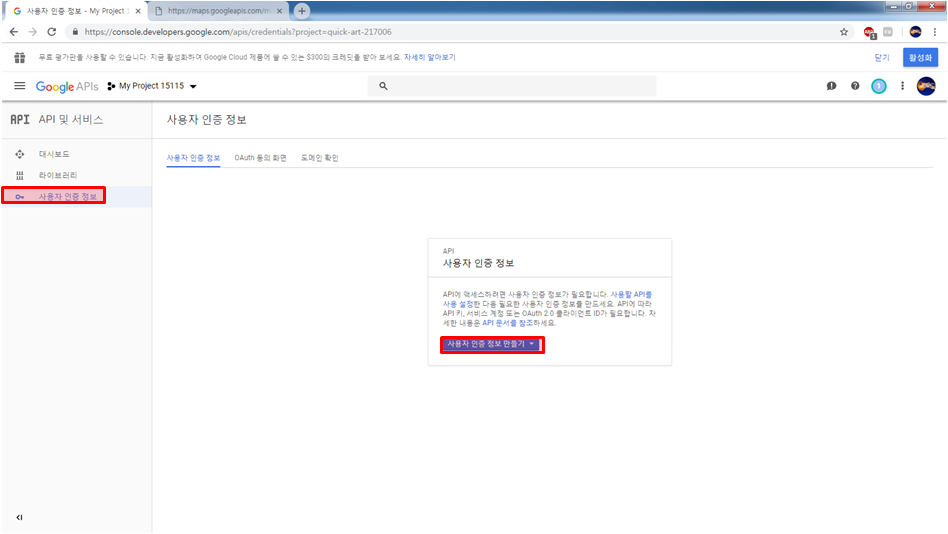

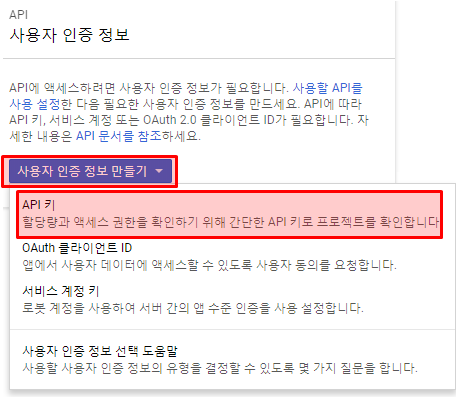

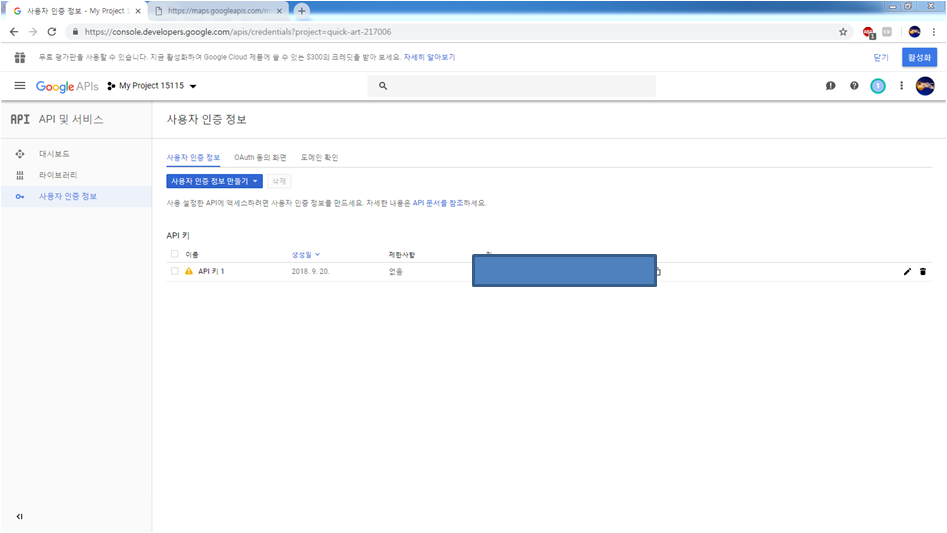

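Google's Geocoding API used to accept a plain request such as

https://maps.googleapis.com/maps/api/geocode/json?address=경복궁

and let you look up Gyeongbokgung (경복궁) on the map, but it has since switched to a billing-based policy.

Ordinary users can still use it for free for now, so let's try it (currently signed in to Google).

Let's make it print the JSON result for a given station name.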

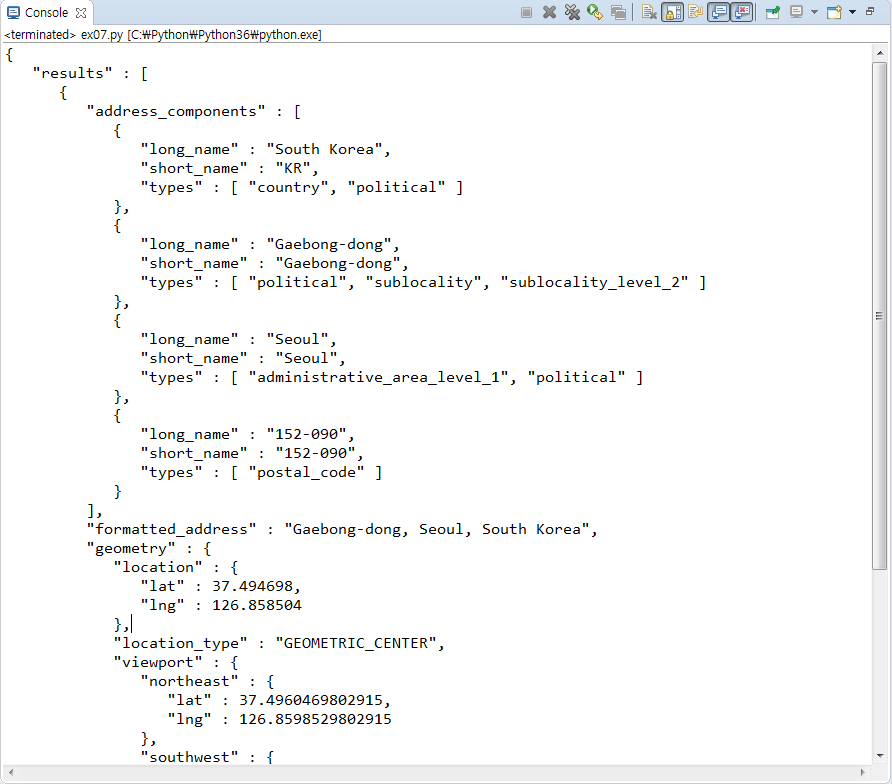

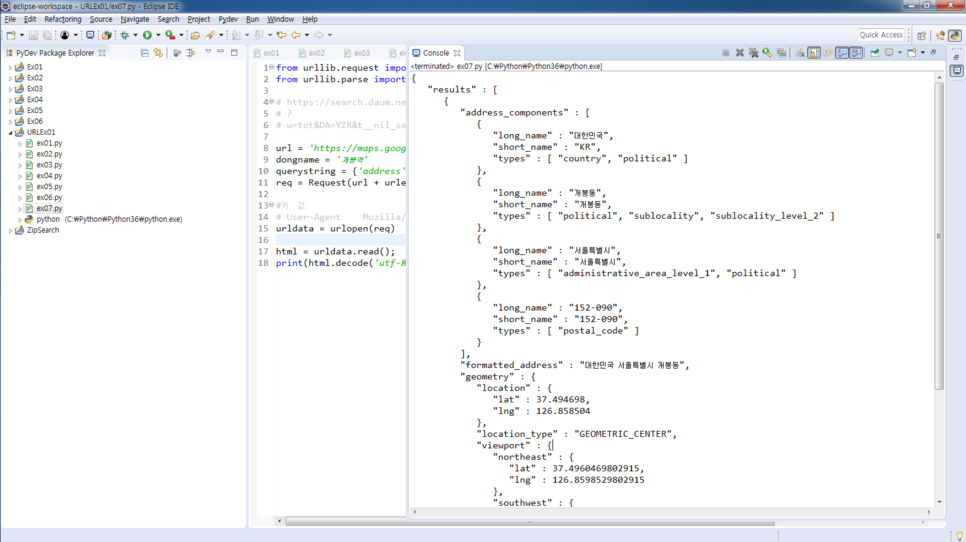

Ex01.ex07

from urllib.request import Request, urlopen

from urllib.parse import urlencode

# https://maps.googleapis.com/maps/api/geocode/json

# ?

# address=<place name>&key=<API key>

url = 'https://maps.googleapis.com/maps/api/geocode/json?'

dongname = '개봉역'

querystring = {'address': dongname, 'key': 'YOUR_GEOCODING_API_KEY'}

req = Request(url + urlencode(querystring), headers= {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko'})

# key and value

# User-Agent Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))

The result comes back in English, but the latitude, longitude, and other values match when compared.

To get the result in Korean, add accept-language: ko-KR to headers.

from urllib.request import Request, urlopen

from urllib.parse import urlencode

# https://maps.googleapis.com/maps/api/geocode/json

# ?

# address=<place name>&key=<API key>

url = 'https://maps.googleapis.com/maps/api/geocode/json?'

dongname = '개봉역'

querystring = {'address': dongname, 'key': 'YOUR_GEOCODING_API_KEY'}

req = Request(url + urlencode(querystring), headers= {'accept-language': 'ko-KR', 'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko'})

# key and value

# User-Agent Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))

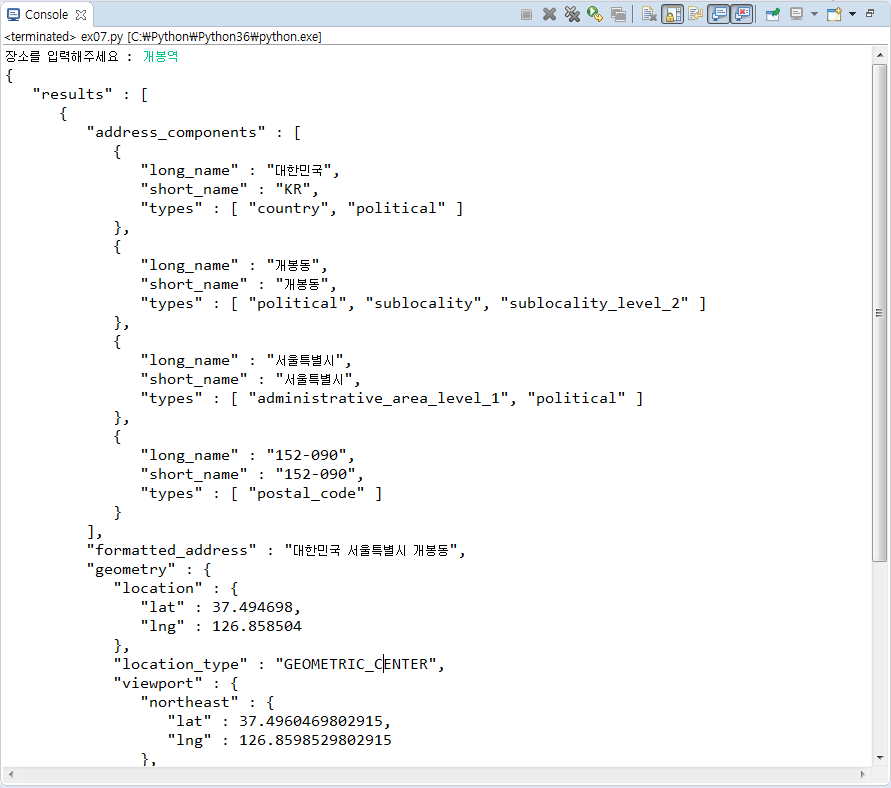
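The decoded body is JSON text, so `json.loads` can turn it into a dict and the coordinates can be read directly. The sketch below assumes the usual Geocoding response layout (`status`, then `results[0].formatted_address` and `results[0].geometry.location`). The `sample` string is a hand-written, abbreviated stand-in with placeholder values, not real API output, and `first_location` is a hypothetical helper name.

```python
import json

# hand-written, abbreviated stand-in for html.decode('utf-8'); values are placeholders
sample = '''
{
  "results": [
    {
      "formatted_address": "(address text)",
      "geometry": {"location": {"lat": 37.0, "lng": 127.0}}
    }
  ],
  "status": "OK"
}
'''

def first_location(body):
    """Return (address, lat, lng) of the first result, or None if there is none."""
    data = json.loads(body)
    if data.get('status') != 'OK' or not data.get('results'):
        return None
    top = data['results'][0]
    loc = top['geometry']['location']
    return top['formatted_address'], loc['lat'], loc['lng']

print(first_location(sample))
```

Checking `status` first avoids an IndexError when the API finds nothing for the given place.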

Now let's read the place from user input and search for it.

from urllib.request import Request, urlopen

from urllib.parse import urlencode

# https://maps.googleapis.com/maps/api/geocode/json

# ?

# address=<place name>&key=<API key>

dongname = input('Enter a place: ')

# reject empty input, matching the message below

if len(dongname) < 1:

    print('Please enter at least one character')

    exit()

url = 'https://maps.googleapis.com/maps/api/geocode/json?'

querystring = {'address': dongname, 'key': 'YOUR_GEOCODING_API_KEY'}

req = Request(url + urlencode(querystring), headers= {'accept-language': 'ko-KR', 'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko'})

# key and value

# User-Agent Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko

urldata = urlopen(req)

html = urldata.read()

print(html.decode('utf-8'))
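As a possible next step, the URL building can be pulled into a function so that the input check and the API key live in one place. This is only a sketch: `build_geocode_url` and the environment variable name `GOOGLE_GEOCODING_KEY` are hypothetical. It also uses the API's `language` query parameter, an alternative to the accept-language header.

```python
import os
from urllib.parse import urlencode

GEOCODE_URL = 'https://maps.googleapis.com/maps/api/geocode/json'

def build_geocode_url(address, key, language='ko'):
    """Return the request URL for address, or raise ValueError if it is blank."""
    address = address.strip()
    if not address:
        raise ValueError('address must not be empty')
    # 'language' asks the API for localized results in the query string itself
    return GEOCODE_URL + '?' + urlencode(
        {'address': address, 'key': key, 'language': language})

# GOOGLE_GEOCODING_KEY is a hypothetical variable name; set it in your shell first
api_key = os.environ.get('GOOGLE_GEOCODING_KEY', 'YOUR_GEOCODING_API_KEY')
print(build_geocode_url('개봉역', api_key))
```

Reading the key from the environment keeps it out of the source file, which matters once the script is shared or posted.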
